As mentioned in my last post, I’ve always enjoyed designing activities for open and online learning. This post is about my favourite, an activity prepared more than 10 years ago for a course I wrote at the Open University of Hong Kong.

With the trendy title ‘Mining Information in the Internet Age’ (not my choice!), the course aimed to help learners develop their critical skills in using the internet.

I was not on my own: a course team was formed (me the writer, a couple of academics and the Librarian), and in particular I was lucky enough to work with highly talented graphic designers and programmers. My half-baked ideas concerning web design and site functionality were transformed into an attractive and functional learning environment by these able colleagues.

Although we were stuck with WebCT (you may well remember the ghastly early interface), I was assured that they could modify it into something more appealing, and this they managed in spades. The example at the right shows the outcome: the page design was exactly what I was after, simple and intuitive – ‘breadcrumbs’ near the top, clear navigation on the left and useful links on the right. Audio outlines were also included, along with extra features and extension activities for those who were interested.

It was a short course (up to about 30 hours of study) and the particular activity I’d like to highlight was in Unit 3. The aim was to provide a tool for assessing the quality of a web site and to enable the learners to try it out on a few examples. This would build up their critical skills to ensure that they didn’t accept online claims at face value.

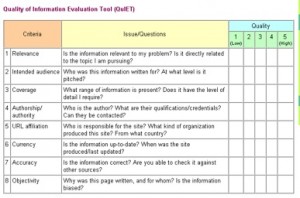

Thus we dreamt (dreamed?) up the QuIET – the Quality of Information Evaluation Tool. Eight criteria were identified for consideration in evaluating a web site: relevance, intended audience, coverage, authorship/authority, URL affiliation, currency, accuracy and objectivity. Specific questions were suggested for each criterion, and a 5-point scale was added so that each site could be given an estimated measure of quality.
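
The post doesn't say exactly how the eight ratings were combined into a single measure, so the sketch below is only one hypothetical reading: each criterion is rated from 1 to 5 and the ratings are simply summed, giving a total between 8 and 40. The function name `quiet_score` and the summing rule are assumptions made for illustration, not the original course implementation.

```python
# The eight QuIET criteria, as listed in the post.
CRITERIA = (
    "relevance",
    "intended audience",
    "coverage",
    "authorship/authority",
    "URL affiliation",
    "currency",
    "accuracy",
    "objectivity",
)


def quiet_score(ratings: dict[str, int]) -> int:
    """Hypothetical scoring: sum one 1-5 rating per criterion (range 8-40)."""
    missing = set(CRITERIA) - set(ratings)
    if missing:
        raise ValueError(f"unrated criteria: {sorted(missing)}")
    for name, value in ratings.items():
        if name not in CRITERIA:
            raise ValueError(f"unknown criterion: {name}")
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be rated 1-5, got {value}")
    # Assumed combination rule: a plain, unweighted sum.
    return sum(ratings[c] for c in CRITERIA)
```

For example, rating a site 3 on every criterion gives a total of 24. A weighted sum (say, counting accuracy more heavily) would be an equally plausible design; the plain sum is simply the easiest to explain to learners.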

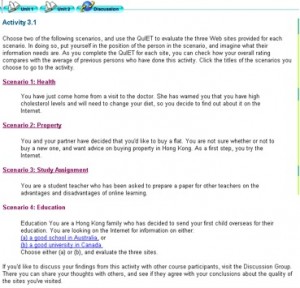

The activity required participants to use the tool directly to evaluate the quality of three websites for one of four possible scenarios. The four scenarios (health, property, online learning and overseas education) were deliberately chosen to appeal to our learners, who were working adults living in Hong Kong.

By clicking on the scenario of their choice, participants were taken to a page where they could use the evaluation tool to investigate and assess a web site directly. The three sites for each scenario were selected to illustrate how widely the quality of information can vary, depending on how well each site measures up to the criteria. For example, the sites on Canadian universities included both a government information site and a commercial recruitment agent.

Our web programmer did a lovely job for this part of the activity, with the QuIET tool at the side and each site to be analysed appearing in the lower right of the browser window. As each site was rated using the tool, the next of the three sites would appear. When all three had been rated, the learner’s score was listed, along with the average of all previous learners’ scores. This let learners see how their analysis compared with that of their peers.
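
The peer comparison could be sketched along the following lines. This is a hypothetical reconstruction, not the programmer’s actual code: it assumes each learner’s score is the sum of their three site totals, and that the average shown covers only learners who finished before them, so the very first learner has no average to compare against. The class name `PeerComparison` is invented for illustration.

```python
from statistics import mean
from typing import List, Optional, Tuple


class PeerComparison:
    """Hypothetical store of scores from learners who completed the activity."""

    def __init__(self) -> None:
        self._previous: List[int] = []

    def submit(self, site_scores: List[int]) -> Tuple[int, Optional[float]]:
        """Record one learner's three site totals; return (score, peer average)."""
        if len(site_scores) != 3:
            raise ValueError("expected ratings for exactly three sites")
        # Assumption: a learner's score is the sum of their three site totals.
        learner_score = sum(site_scores)
        # Average over earlier learners only; None for the first learner.
        peer_average = mean(self._previous) if self._previous else None
        self._previous.append(learner_score)
        return learner_score, peer_average
```

Computing the average before storing the new score means a learner is always compared with their peers rather than partly with themselves.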

Although we were pleased with the course, it was not particularly popular with students as a fee-paying offering. Undeterred, the university decided to make better use of it by offering it free to all staff and students. I was happy to see it work successfully for a few years before drifting into obsolescence.